Deeper Dive

Integrity is an AI image detector that classifies images by looking for signs of authenticity rather than signs of artificiality. Part of my inspiration came in the fall of 2024, during Hurricane Helene, when scammers spread misinformation by setting up fake charity fundraisers and using AI-generated images of abandoned young children to lure unsuspecting donors. My aunt lives in Asheville, North Carolina, and it took time for her to recover from the devastation. What we heard from her did not match some of the photos we saw, which made building Integrity feel all the more urgent.

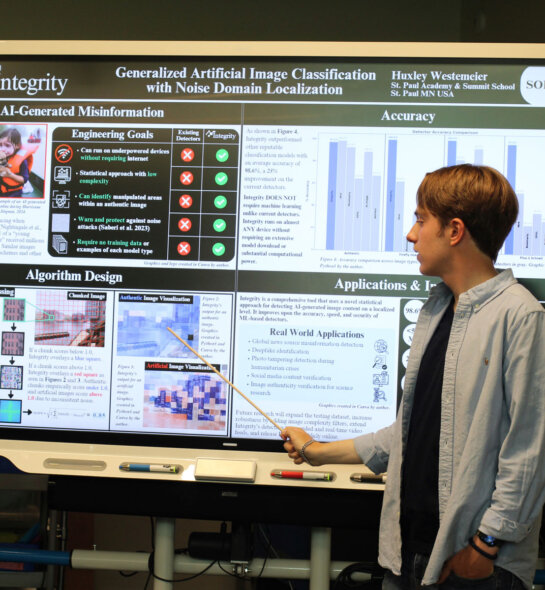

Integrity is significant because it uses a purely statistical approach in a field dominated by machine learning models, which are computationally expensive and often impractical to run locally. Integrity is lightweight, can run on any device, doesn’t require an updated model each time a new generator version is released, and is more robust. Together, these properties make it a simple yet powerful tool to combat AI-generated misinformation.

Integrity helps improve quality of life by providing an accessible, reliable tool to counter global misinformation, which the World Economic Forum recently ranked as the most severe global risk over the next two years. Malicious AI content can spread propaganda, incite violence, and erode trust. Integrity empowers anyone, especially those unfamiliar with machine learning or detection algorithms, to verify the content they see daily in news articles or other sources. It can also show users the specific sections of an image that are likely manipulated. In testing, Integrity reached over 93% accuracy on a public deepfake dataset, 97% on my custom dataset, and 100% accuracy on authentic images. By making image authentication at this level available to more people than ever before, Integrity can reduce the spread of misinformation globally.